Autodesk released the beta of their 123D Catch 3D scanning software, so I figured I’d take it for a spin. It’s supposed to leverage the “power and speed of cloud computing”: you take a number of pictures shot around an object, upload them (to the cloud!), and they are processed into a 3D mesh.

I do want to say that this is profoundly cool software, and any quibbles I have (of which there are a few) are dwarfed by the fact that it’s a free way to generate a 3D mesh using only a digital camera. That’s amazing, and Autodesk should be proud. However…

My first complaint is that the only manual consists of four short YouTube videos. This is all too common, so it’s not only Autodesk’s fault. But seriously, you can’t put together a couple of paragraphs and some screen captures? Of course, I couldn’t be bothered doing the same, so you just get some random pictures as I go along. Their help forum is empty right now, but I figure it will get going as people try the software.

This is the first test subject, a dusty ceramic chicken. The video was clear that a flash should not be used. This picture is actually brighter than the original, as I deleted the darker original during a tantrum earlier…

A series of pictures, 30 MB in total, was uploaded.

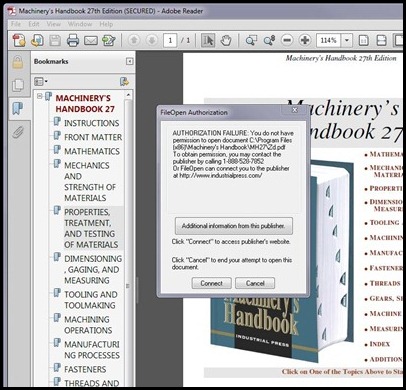

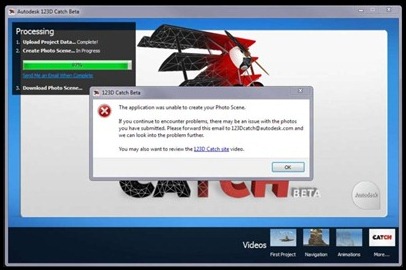

After 15 minutes I was greeted with this notice.

I checked the pictures and they seemed much darker than what I had uploaded, so I bumped up the brightness and uploaded them again (15 more minutes). It would be good if the software ran a preliminary check before uploading to determine whether the pictures fall below a brightness threshold… I have 6 Mbps DSL, but my upload speed is only about 0.4 Mbps. This is the #1 problem with cloud computing, at least at the consumer level: slow upload times.
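A pre-upload brightness check like the one I’m wishing for could be fairly simple. Here is a minimal sketch in Python, assuming each photo has already been decoded into a list of (R, G, B) pixel values from 0 to 255 (loading JPEGs would normally take an image library, which I’ve left out). It averages the Rec. 601 luma of the pixels and flags anything below a threshold. The threshold of 80 is my own guess, not something 123D Catch documents, and the function and file names are made up for illustration.

```python
# Hypothetical pre-upload check: flag photos whose average brightness is
# below a threshold. Pixels are (r, g, b) tuples with 0-255 channels.

MIN_MEAN_LUMA = 80.0  # assumed threshold on a 0-255 scale, not from 123D Catch


def mean_luma(pixels):
    """Average Rec. 601 luma of an iterable of (r, g, b) tuples."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        total += 0.299 * r + 0.587 * g + 0.114 * b
        count += 1
    if count == 0:
        raise ValueError("image has no pixels")
    return total / count


def too_dark(pixels, threshold=MIN_MEAN_LUMA):
    """True if the photo's mean luma is below the threshold."""
    return mean_luma(pixels) < threshold


def dark_photos(photos, threshold=MIN_MEAN_LUMA):
    """Names of photos (name -> pixel list) that should be brightened first."""
    return [name for name, pixels in photos.items() if too_dark(pixels, threshold)]


# Tiny stand-in "photos": one dim gray, one bright warm white.
photos = {
    "chicken_01.jpg": [(40, 40, 40)] * 4,
    "chicken_02.jpg": [(200, 190, 180)] * 4,
}
print(dark_photos(photos))  # ['chicken_01.jpg']
```

A check like this would run in a fraction of a second on the local machine, which is a lot cheaper than finding out after a 15-minute upload.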

Success! As you can see, not only was the chicken captured, but parts of my desk too…

But I wasn’t done. I had to select the chicken and upload it again to generate a “medium” mesh, but that only took 5 minutes.

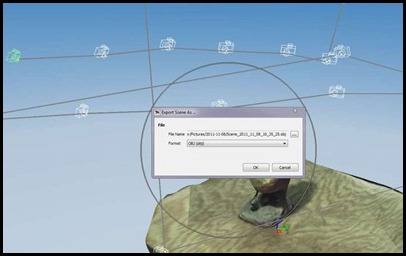

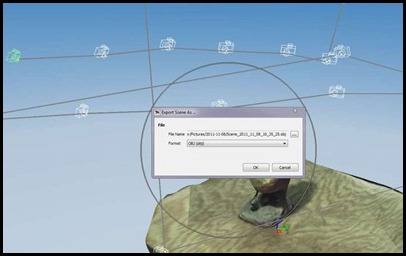

Thankfully, the software allows export in .DWG and .OBJ formats. They could have gone with something that required an Autodesk product, but they didn’t. I chose .OBJ and opened the file in Rhino.

It’s a mesh!
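Part of why .OBJ opens almost anywhere is that it’s plain text. As a rough illustration (a minimal Python sketch, not a complete OBJ reader), this pulls out the vertex positions (`v` lines) and faces (`f` lines). It skips texture coordinates (`vt`), normals (`vn`) and material references, so the texture is ignored; the function name `read_obj` is my own.

```python
def read_obj(text):
    """Return (vertices, faces) parsed from Wavefront OBJ text.

    vertices: list of (x, y, z) floats
    faces: list of tuples of 0-based vertex indices
    Other record types (vt, vn, usemtl, mtllib, ...) are ignored.
    """
    vertices = []
    faces = []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # blank line or comment
        if parts[0] == "v":
            # An optional fourth (w) value is ignored.
            x, y, z = (float(p) for p in parts[1:4])
            vertices.append((x, y, z))
        elif parts[0] == "f":
            idx = []
            for token in parts[1:]:
                # Tokens look like "v", "v/vt", "v/vt/vn" or "v//vn".
                i = int(token.split("/")[0])
                # OBJ indices are 1-based; negative ones count back from the
                # most recently defined vertex.
                idx.append(i - 1 if i > 0 else len(vertices) + i)
            faces.append(tuple(idx))
    return vertices, faces


sample = """# one textured triangle
v 0 0 0
v 1 0 0
v 0 1 0
vt 0 0
f 1/1 2/1 3/1
"""
print(read_obj(sample))
# ([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [(0, 1, 2)])
```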

Trimming the mesh reveals a pretty low-resolution scan. Notice the magenta edges: I used Rhino to show the “naked” edges (edges with a face on only one side). Still, the mesh is pretty good and would require little work to make “watertight”.
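The idea behind naked edges boils down to counting: an edge used by only one face sits on an open boundary, while a closed (“watertight”) mesh has every edge shared by exactly two faces. Below is a minimal Python sketch of that idea, working on faces given as tuples of vertex indices; it illustrates the concept and is not how Rhino actually implements its naked-edge display. The function names are hypothetical.

```python
from collections import Counter


def edge_counts(faces):
    """Count how many faces use each undirected edge."""
    counts = Counter()
    for face in faces:
        n = len(face)
        for k in range(n):
            a, b = face[k], face[(k + 1) % n]
            counts[(min(a, b), max(a, b))] += 1
    return counts


def naked_edges(faces):
    """Edges used by exactly one face, i.e. open boundary edges."""
    return sorted(edge for edge, c in edge_counts(faces).items() if c == 1)


def is_watertight(faces):
    """True when every edge is shared by exactly two faces."""
    counts = edge_counts(faces)
    return bool(counts) and all(c == 2 for c in counts.values())


# A tetrahedron is closed; dropping one face opens a triangular hole.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
print(is_watertight(tetra))    # True
print(naked_edges(tetra[:3]))  # [(0, 2), (0, 3), (2, 3)]
```

Making a scan watertight amounts to driving that naked-edge list to empty, usually by filling the holes they outline.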

Not content with the detail, I made a new mesh, selecting the maximum resolution. This took only about 6 minutes to process. It certainly does look more like a chicken. I should note that the texture came through in Rhino as well.

The only problem is that the texture hides the low level of detail, or rather makes the mesh seem more detailed than it is.

So I decided to try scanning a pistol grip. I had previously painted it flat white for an attempt at laser scanning. This is the level of brightness that I uploaded. I took the pictures outside (it was overcast) and figured they would work…

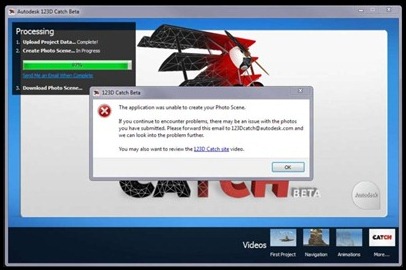

After 30 minutes I was notified that it had failed… Arghh…

Again, the pictures seemed much darker in the software.

So I bumped up the brightness and contrast in Paint.NET. I also reduced the picture size so that the upload would take less time.
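The arithmetic behind shrinking the pictures is straightforward. At 0.4 Mbps, the 30 MB from the chicken shoot works out to about 10 minutes of upload alone (30 MB × 8 = 240 Mb, and 240 Mb ÷ 0.4 Mbps = 600 s), ignoring protocol overhead. If file size roughly tracks pixel count, halving the width and height should cut the upload to about a quarter. Here is a rough Python sketch; the pixel-count scaling is an approximation (JPEG sizes depend on content and quality settings), MB is taken as 10^6 bytes, and the function names are hypothetical.

```python
def upload_minutes(megabytes, upload_mbps):
    """Minutes to send `megabytes` (10^6 bytes) at `upload_mbps` megabits/s, no overhead."""
    megabits = megabytes * 8
    return megabits / upload_mbps / 60


def resized_megabytes(megabytes, scale):
    """Rough file size after scaling width and height by `scale`.

    Assumes size is proportional to pixel count, which JPEGs only approximate.
    """
    return megabytes * scale ** 2


full = upload_minutes(30, 0.4)                          # about 10 minutes
half = upload_minutes(resized_megabytes(30, 0.5), 0.4)  # about 2.5 minutes
print(f"full size: {full:.1f} min, half size: {half:.1f} min")
# full size: 10.0 min, half size: 2.5 min
```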

Success after only 15 minutes…

Not a bad mesh.

The nice thing about having the texture is that it makes it easier to see where to trim the mesh away. See what I mean about the texture making the mesh seem more detailed than it actually is? The checkering on the grip didn’t come through in the mesh at all, but it seems to exist with the texture overlaid.

I’m sure that as I play with the software I’ll learn what it needs in the way of picture quality and brightness, as well as how to capture the maximum detail (although I’d love some help from Autodesk in the form of more detailed instructions…). Whether it’s good enough for high-resolution scans of small objects remains to be seen.